Reduce Complexity with Production-Grade Kubernetes

Editor – This post is part of a 10-part series:

- Reduce Complexity with Production-Grade Kubernetes (this post)

- How to Improve Resilience in Kubernetes with Advanced Traffic Management

- How to Improve Visibility in Kubernetes

- Six Ways to Secure Kubernetes Using Traffic Management Tools

- A Guide to Choosing an Ingress Controller, Part 1: Identify Your Requirements

- A Guide to Choosing an Ingress Controller, Part 2: Risks and Future-Proofing

- A Guide to Choosing an Ingress Controller, Part 3: Open Source vs. Default vs. Commercial

- A Guide to Choosing an Ingress Controller, Part 4: NGINX Ingress Controller Options

- How to Choose a Service Mesh

- Performance Testing NGINX Ingress Controllers in a Dynamic Kubernetes Cloud Environment

You can also download the complete set of blogs as a free eBook – Taking Kubernetes from Test to Production.

2020 was a year that few of us will ever forget. The abrupt shuttering of schools, businesses, and public services left us suddenly isolated from our communities and thrown into uncertainty about our safety and financial stability. Now imagine for a moment that this had happened in 2000, or even 2010. What would be different? Technology. Without the high‑quality digital services that we take for granted – healthcare, streaming video, remote collaboration tools – a pandemic would be a very different experience. What made the technology of 2020 so different from past decades? Containers and microservices.

Microservices architectures – which generally make use of containers and Kubernetes – fuel business growth and innovation by reducing time to market for digital experiences. Whether deployed alongside traditional architectures or on their own, these modern app technologies enable greater scalability and flexibility, faster deployments, and even cost savings.

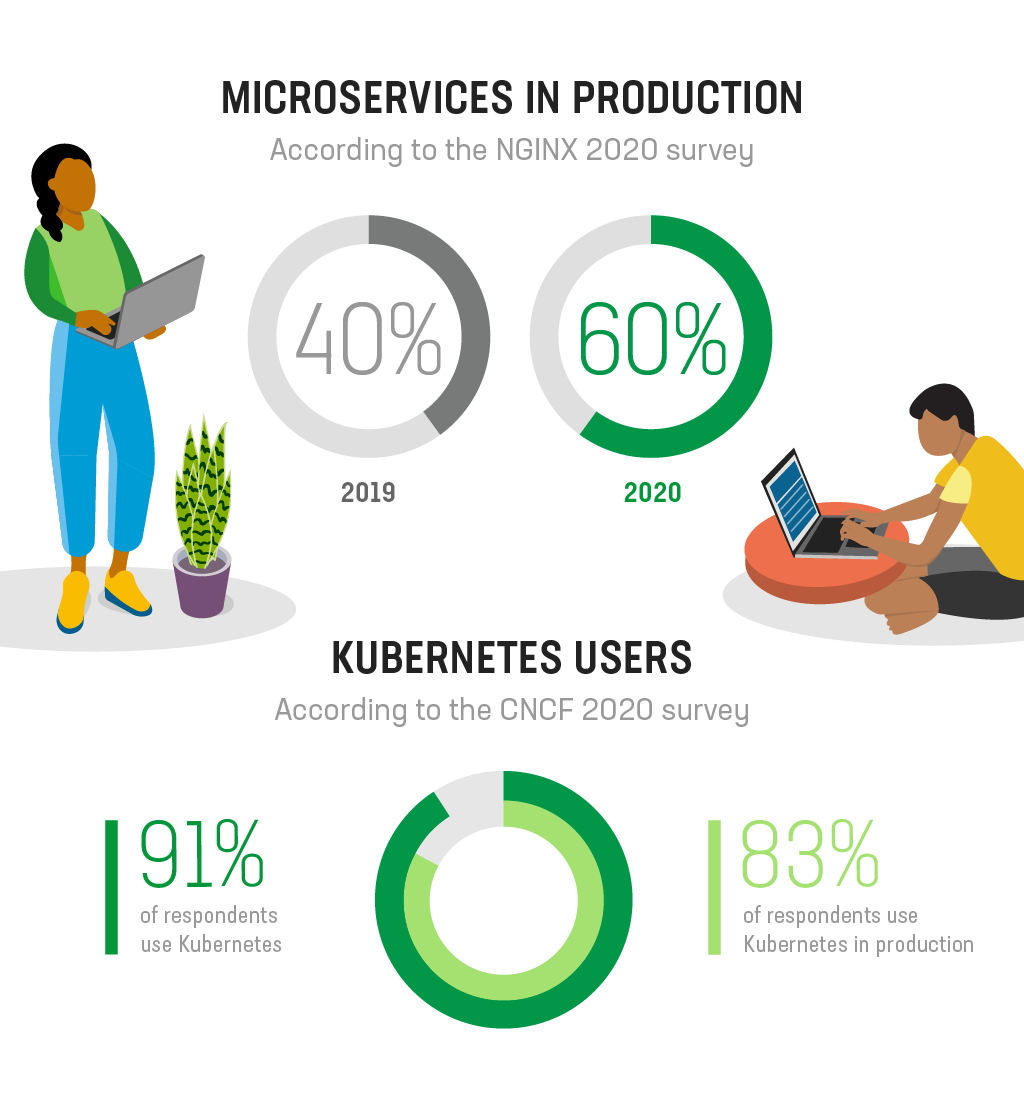

Prior to 2020, we found that most of our customers had already started adopting microservices as part of their digital transformation strategy, but the pandemic truly accelerated app modernization. Our 2020 survey of NGINX users found that 60% of respondents are using microservices in production, up from 40% in 2019, and containers are more than twice as popular as other modern app technologies.

Kubernetes is the de facto standard for managing containerized apps, as evidenced by the Cloud Native Computing Foundation (CNCF)’s 2020 survey, which found that 91% of respondents are using Kubernetes – 83% of them in production. When adopting Kubernetes, many organizations are prepared for substantial architectural changes but are surprised by the organizational impacts of running modern app technologies at scale. If you’re running Kubernetes, you’ve likely encountered all three of these business‑critical barriers:

- Culture

Even as app teams adopt modern approaches like agile development and DevOps, they usually remain subject to Conway’s Law, which states that “organizations design systems that mirror their own communication structure”. In other words, distributed applications are developed by distributed teams that operate independently but share resources. While this structure is ideal for enabling teams to move fast, it also encourages silos to form. Consequences include poor communication (with its own knock‑on effects), security vulnerabilities, tool sprawl, inconsistent automation practices, and clashes between teams.

- Complexity

To implement enterprise‑grade microservices technologies, organizations must piece together a suite of critical components that provide visibility, security, and traffic management. Typically, teams use infrastructure platforms, cloud‑native services, and open source tools to fill this need. While there is a place for each of these strategies, each has drawbacks that can contribute to complexity. All too often, different teams within a single organization choose different strategies to satisfy the same requirements, resulting in “operational debt”. Further, teams choose processes and tools at a point in time and keep using them even as the requirements for deploying and running modern microservices‑driven applications in containers change.

The CNCF Cloud Native Interactive Landscape is a good illustration of the complexity of the infrastructure necessary to support microservices‑based applications. Organizations need to become proficient in a wide range of disparate technologies, with consequences that include infrastructure lock‑in, shadow IT, tool sprawl, and a steep learning curve for those tasked with maintaining the infrastructure.

- Security

Security requirements differ significantly for cloud‑native and traditional apps, because strategies such as ring‑fenced security aren’t viable in Kubernetes. The large ecosystem and the distributed nature of containerized apps mean the attack surface is much larger, and the reliance on external SaaS applications means that employees and outsiders have many more opportunities to inject malicious code or exfiltrate information. Further, the consequences outlined in the culture and complexity areas (tool sprawl, in particular) have a direct impact on the security and resiliency of your modern apps. Using different tools around your ecosystem to solve the same problem is not just inefficient; it creates a huge challenge for SecOps teams, who must learn how to properly configure each component.

The Solution: Production-Grade Kubernetes

As with most organizational problems, the answer to overcoming the challenges of Kubernetes is a combination of technology and processes. We’re going to focus on the technology component for the remainder of this post, but keep an eye out for future blogs on process and other topics.

Because Kubernetes is an open source technology, there are numerous ways to implement it. While some organizations prefer to roll their own vanilla Kubernetes, many find value in the combination of flexibility, prescriptiveness, and support provided by services such as Amazon Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), Microsoft Azure Kubernetes Service (AKS), Red Hat OpenShift Container Platform, and Rancher.

Kubernetes platforms can make it easy to get up and running; however, they focus on breadth of services rather than depth. So while you may get all the services you need in one place, those services are unlikely to offer the feature sets you need for true production readiness at scale. In particular, the platforms don’t focus on advanced networking and security, which is where we see Kubernetes disappointing many customers.

To make Kubernetes production‑grade, you need to add three more components in this order:

A scalable ingress‑egress tier to get traffic in and out of the cluster

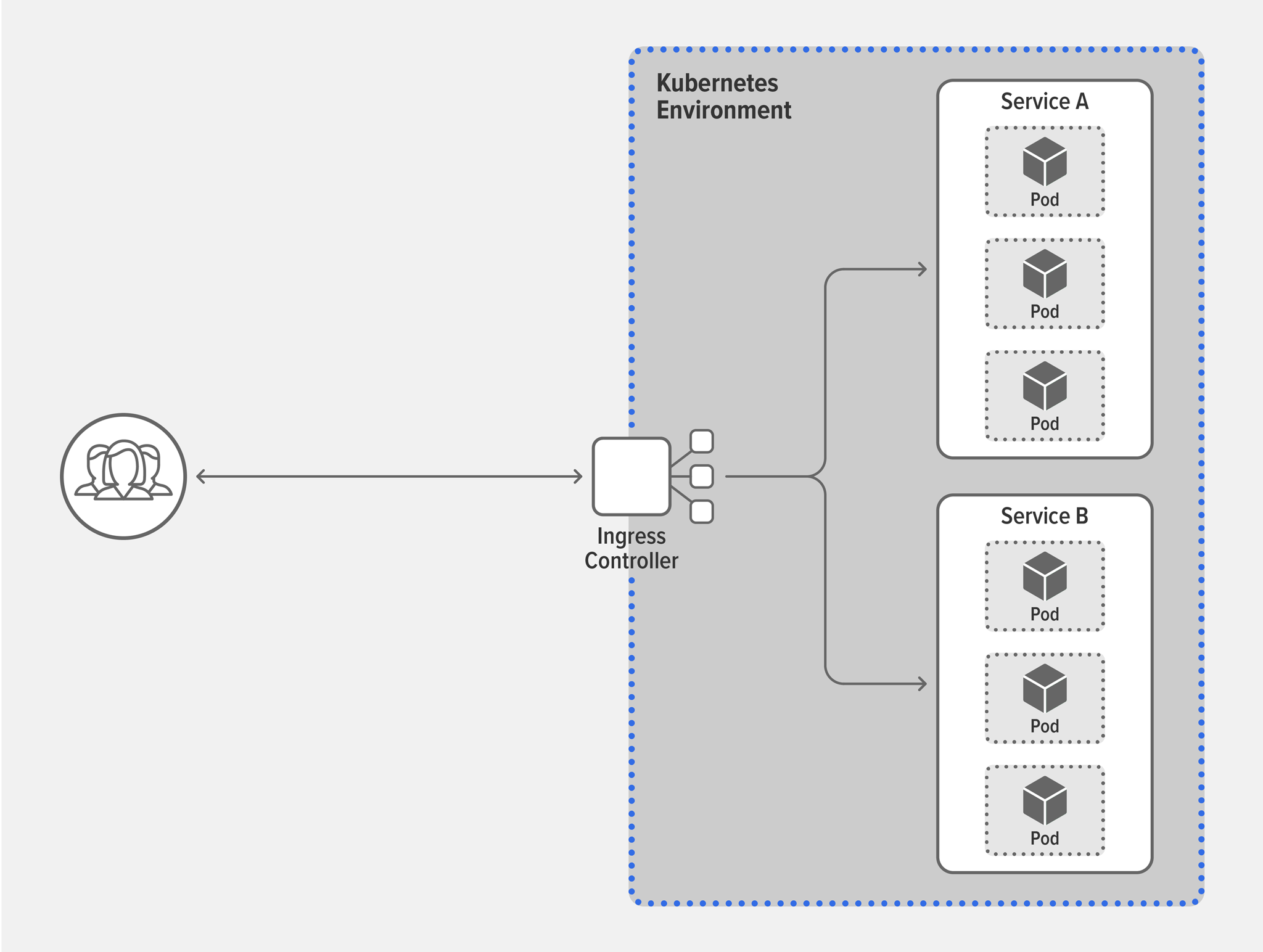

This is accomplished with an Ingress controller, which is a specialized load balancer that abstracts away the complexity of Kubernetes networking and bridges between services in a Kubernetes cluster and those outside it. This component becomes production‑grade when it includes features that increase resiliency (for example, advanced health checks and Prometheus metrics), enable rapid scalability (dynamic reconfiguration), and support self‑service (role‑based access control [RBAC]).

Built‑in security to protect against threats throughout the cluster

While “coarse‑grained” security might be sufficient outside the cluster, “fine‑grained” security is required inside it. Depending on the complexity of your cluster, there are three locations where you may need to deploy a flexible web application firewall (WAF): on the Ingress controller, as a per‑service proxy, and as a per‑pod proxy. This flexibility lets you apply stricter controls to sensitive apps, such as billing, and looser controls where risk is lower.

A scalable east‑west traffic tier to optimize traffic within the cluster

This third component is needed once your Kubernetes applications have grown beyond the level of complexity and scale that basic tools can handle. At this stage, you need a service mesh, which is an orchestration tool that provides even finer‑grained traffic management and security to application services within the cluster. A service mesh is typically responsible for managing application routing between containerized applications, providing and enforcing autonomous service-to-service mutual TLS (mTLS) policies, and providing visibility into application availability and security.
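At its core, the ingress‑egress tier described above makes a routing decision for every incoming request: which Service inside the cluster should receive it. The following Python sketch illustrates that decision. It loosely follows the Kubernetes Ingress `Exact`/`Prefix` path‑matching rules (longest matching path wins, and `Exact` beats `Prefix` on a tie), and it also assumes, as a simplification of our own, that host‑specific rules take priority over rules with no host. The `Rule` class, the `route` function, the hostnames, and the Service names are all hypothetical and not part of any NGINX or Kubernetes API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    """One hypothetical routing rule: optional host, path, match type, target Service."""
    host: Optional[str]  # None means "match any host"
    path: str
    path_type: str       # "Exact" or "Prefix"
    service: str         # hypothetical backend Service name


def _segments(path: str) -> list[str]:
    # "/api/billing/" -> ["api", "billing"]; empty elements (e.g. a trailing slash) are dropped.
    return [s for s in path.split("/") if s]


def _matches(rule: Rule, path: str) -> bool:
    if rule.path_type == "Exact":
        return path == rule.path
    # Prefix matching is element-wise: "/api" matches "/api/x" but not "/apiary".
    rule_segs = _segments(rule.path)
    return _segments(path)[: len(rule_segs)] == rule_segs


def route(rules: list[Rule], host: str, path: str) -> Optional[str]:
    """Return the Service that should receive the request, or None if no rule matches."""
    candidates = [
        r for r in rules
        if (r.host is None or r.host == host) and _matches(r, path)
    ]
    if not candidates:
        return None  # a real controller would fall back to a default backend here
    # Assumed precedence: host-specific rule, then longest path, then Exact over Prefix.
    best = max(
        candidates,
        key=lambda r: (r.host is not None, len(r.path), r.path_type == "Exact"),
    )
    return best.service


# Example rules (hypothetical hosts and Service names).
rules = [
    Rule(None, "/", "Prefix", "frontend-svc"),
    Rule("shop.example.com", "/api", "Prefix", "api-svc"),
    Rule("shop.example.com", "/api/billing", "Prefix", "billing-svc"),
]

print(route(rules, "shop.example.com", "/api/billing/invoices"))  # billing-svc
print(route(rules, "shop.example.com", "/apiary"))                # frontend-svc
```

In a real cluster, rules like these are declared in Ingress resources, and the Ingress controller translates them into proxy configuration. This sketch covers only the matching logic, not the health checks, metrics, dynamic reconfiguration, or RBAC that make the tier production‑grade.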

When selecting these components, prioritize portability and visibility. Platform‑agnostic components reduce complexity and improve security: there are fewer tools for your teams to learn and secure, and workloads are easier to shift as your business needs change. The importance of visibility and monitoring is hard to overstate. Integrations with popular tools like Grafana and Prometheus create a unified “single pane of glass” view of your infrastructure, helping your team detect problems before your customers discover them.

In addition, there are other complementary technologies that aren’t strictly required for production‑grade Kubernetes but are integral parts of modern app development. For example, when organizations are ready to modernize traditional apps, one of the first steps is building microservices with an API gateway.

How NGINX Can Help

Our Kubernetes solutions are platform‑agnostic and include the three components you need to enable production‑grade Kubernetes: NGINX Ingress Controller as the ingress‑egress tier, NGINX App Protect as the WAF, and NGINX Service Mesh as the east‑west tier.

These solutions can make Kubernetes your best friend by helping you in four key areas:

- Automation – Get your apps to market faster and more safely

Deploy, scale, secure, and update apps using NGINX Ingress Controller’s traffic routing and app onboarding capabilities paired with NGINX Service Mesh’s automatic deployment of NGINX Plus sidecars.

- Security – Protect your customers and business from existing and emerging threats

Reduce potential points of failure by deploying NGINX App Protect anywhere in the cluster while using NGINX Service Mesh and NGINX Ingress Controller to govern and enforce end‑to‑end encryption between services.

- Performance – Deliver the digital experiences your customers and users expect

Effortlessly handle traffic spikes and security threats without compromising performance, with NGINX solutions that outperform other WAFs, Ingress controllers, and load balancers.

- Insight – Evolve your business and better serve customers

Get targeted insights into app performance and availability from NGINX Ingress Controller and NGINX Service Mesh, with deep traces to understand how requests are processed across your microservices apps.

Getting Production-Ready with NGINX

NGINX Ingress Controller is available as a 30‑day free trial, which includes NGINX App Protect to secure your containerized apps. We recommend adding the always‑free NGINX Service Mesh (available for download at f5.com) to get the most out of your trial. Today you can bring your own license (BYOL) to the cloud of your choice.

"This blog post may reference products that are no longer available and/or no longer supported. For the most current information about available F5 NGINX products and solutions, explore our NGINX product family. NGINX is now part of F5. All previous NGINX.com links will redirect to similar NGINX content on F5.com."